The world of Artificial Intelligence is evolving at a breakneck pace. Transformer models are leading the charge in revolutionizing how machines understand and generate human language.

From advancements in model architecture to groundbreaking applications across various industries, transformer models have become the backbone of modern AI.

Read this blog post for the latest transformer model development trends, and explore the cutting-edge advancements and innovative practices that are pushing the boundaries of what is possible in AI.

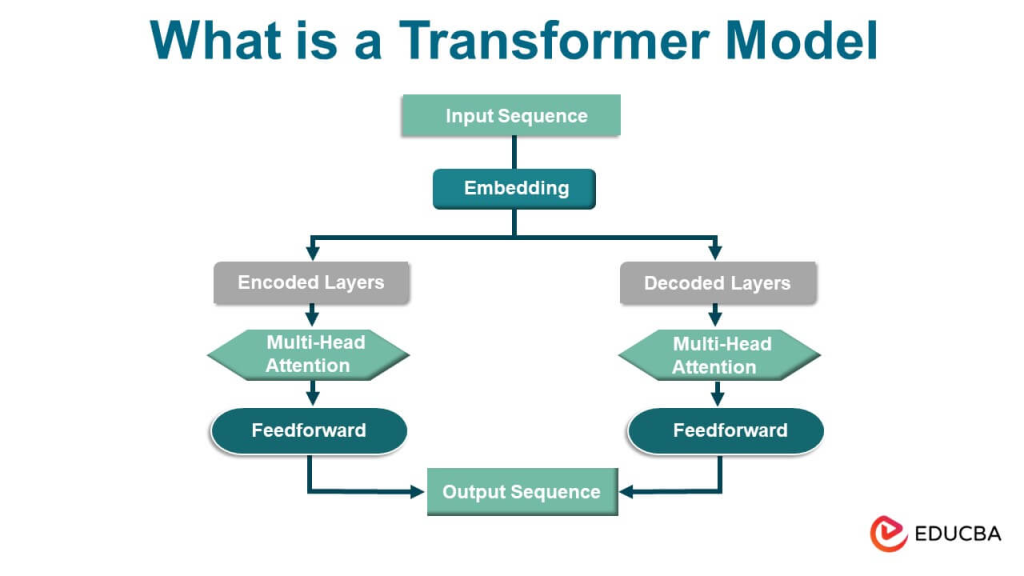

Understanding Transformer Models

Unlike earlier sequence models such as recurrent neural networks, transformers dispense with recurrence and rely on the attention mechanism to relate the words in a sequence to one another. This lets them process entire sequences in parallel, which makes training far more efficient. This breakthrough has revolutionized the fields of Artificial Intelligence and Natural Language Processing.

Img src: Google

The impact of transformers has been profound, leading to the development of different types of transformer models such as GPT, BERT, and T5, which have set new benchmarks in AI performance. The key components of the transformer architecture include:

- Self-Attention Mechanism: This mechanism allows the model to weigh the importance of different words in a sentence relative to each other. Self-attention helps the model understand which parts of the input are relevant to each other, regardless of their positions in the sequence.

- Encoder-Decoder Architecture: The encoder processes the input data and converts it into a set of continuous representations. The decoder then takes these representations and generates the output, such as translated text or responses to queries.

- Positional Encoding: Because self-attention has no built-in notion of word order, positional encodings are added to the input embeddings to give the model information about the position of each word in the sequence.

- Feedforward Neural Networks: Within each layer of the transformer, a feedforward neural network is applied to every position independently to further process the outputs of the self-attention mechanism.
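To make the self-attention and positional-encoding components above more concrete, here is a minimal NumPy sketch of single-head scaled dot-product self-attention, with the sinusoidal positional encodings from the original Transformer paper added to the input. The token embeddings and weight matrices are random placeholders rather than trained values, and the function names are illustrative, not any library's API.

```python
import numpy as np

def sinusoidal_positional_encoding(seq_len: int, d_model: int) -> np.ndarray:
    """Sinusoidal position encodings (sin on even dims, cos on odd dims); d_model must be even."""
    positions = np.arange(seq_len)[:, np.newaxis]           # shape (seq_len, 1)
    dims = np.arange(0, d_model, 2)[np.newaxis, :]          # the "2i" indices, shape (1, d_model/2)
    angles = positions / np.power(10000.0, dims / d_model)  # pos / 10000^(2i/d_model)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    exp = np.exp(x - x.max(axis=axis, keepdims=True))  # subtract max for numerical stability
    return exp / exp.sum(axis=axis, keepdims=True)

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention; x has shape (seq_len, d_model)."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])  # (seq_len, seq_len): relevance of token j to token i
    weights = softmax(scores, axis=-1)       # each row is a probability distribution
    return weights @ v, weights

rng = np.random.default_rng(0)
seq_len, d_model = 4, 8
token_embeddings = rng.normal(size=(seq_len, d_model))  # placeholder embeddings for 4 tokens
x = token_embeddings + sinusoidal_positional_encoding(seq_len, d_model)
w_q, w_k, w_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))  # untrained weights

output, weights = self_attention(x, w_q, w_k, w_v)
print(output.shape)  # (4, 8): one context-aware vector per token
print(weights.round(2))
```

Real transformer layers run several such heads in parallel (multi-head attention) and pass the result through the feedforward network described above.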

Current Trends in Transformer Model Development

Have a look at the key transformer model development trends:

Scaling and Efficiency Improvements

As transformer models continue to evolve, significant strides have been made in scaling these models and improving their efficiency. Two key areas of development include techniques for scaling transformer models and innovations aimed at reducing computational requirements.

Techniques for Scaling Transformer Models

- GPT-4: OpenAI’s GPT-4 represents a significant leap in the capabilities of language models. OpenAI has not published its parameter count or architecture details, but GPT-4 is generally seen as a product of scaling up model size, training data, and compute, and it can understand and generate human-like text with remarkable accuracy.

- PaLM: Google’s PaLM (Pathways Language Model), a 540-billion-parameter model trained with massive amounts of data and compute power, achieved state-of-the-art performance across a variety of NLP tasks when it was released. This model demonstrates how scaling can push the boundaries of what AI can accomplish.
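To put "scaling up the number of parameters" in numbers, a common back-of-the-envelope estimate is that each standard transformer layer holds about 12 × d_model² weights: 4 × d_model² for the attention projections and 8 × d_model² for a feedforward block whose hidden size is 4 × d_model. The Python sketch below applies that estimate to a few hypothetical configurations; it is a generic approximation, not the actual architecture of GPT-4 (which OpenAI has not published) or PaLM.

```python
def approx_transformer_params(n_layers: int, d_model: int, vocab_size: int = 0) -> int:
    """Back-of-the-envelope parameter count for a stack of standard transformer layers.

    Assumes per layer: four d_model x d_model attention projections (Q, K, V, output)
    and a two-matrix feedforward block with hidden size 4 * d_model.
    Biases and layer-norm parameters are ignored.
    """
    attention = 4 * d_model * d_model
    feedforward = 2 * d_model * (4 * d_model)
    embeddings = vocab_size * d_model  # token embedding table, if vocab_size is given
    return n_layers * (attention + feedforward) + embeddings

# Hypothetical configurations of increasing depth and width.
for n_layers, d_model in [(12, 768), (24, 1024), (48, 1600)]:
    params = approx_transformer_params(n_layers, d_model)
    print(f"{n_layers:>3} layers, d_model={d_model:>5}: ~{params / 1e9:.2f}B parameters")
```

Because the per-layer count grows with the square of d_model, doubling the model width roughly quadruples the number of parameters, which is one reason larger models demand so much more memory and compute.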

Transfer Learning and Fine-Tuning

By employing transfer learning and fine-tuning, developers can create highly specialized and efficient models tailored to the unique needs of different tasks and industries, making full use of the capabilities of large pre-trained transformers.

- Pre-trained Models: These are models that have been trained on large, general-purpose datasets so that they can be reused for a wide variety of tasks. They serve as a foundation for solving specific problems without starting from scratch.

- Transfer Learning: This technique involves taking a pre-trained model and adapting it to a specific task. It leverages the knowledge acquired during the initial training phase and applies it to new, related tasks.

Methods for Fine-Tuning Models for Specific Tasks and Industries

- Task-Specific Fine-Tuning: Involves training a pre-trained model on a smaller, task-specific dataset to refine its performance for a particular application. This could be anything from sentiment analysis in customer reviews to medical diagnosis based on patient records.

- Layer Freezing: During fine-tuning, certain layers of the pre-trained model are kept frozen, meaning their weights are not updated. Typically, only the final layers are retrained on the new data, preserving the general knowledge of the pre-trained model while adapting it to the new task.

- Domain Adaptation: Fine-tuning also involves adapting models to specific industries or domains, such as finance, healthcare, or e-commerce. This ensures that the model can understand and process domain-specific language and nuances effectively.

- Hyperparameter Tuning: Adjusting hyperparameters like learning rate, batch size, and the number of training epochs during fine-tuning to optimize model performance for the specific task.

- Customizing Architectures: Modifying the architecture of pre-trained models to better suit the target task. This might include adding task-specific layers or integrating additional features that are important for the specific application.
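Layer freezing and hyperparameter tuning can be illustrated together in a few lines. The NumPy toy below is a hypothetical stand-in, not a transformer: a fixed random projection plays the role of the frozen pre-trained layers, only a small linear "head" is trained with gradient descent, and a small grid search over learning rate and number of epochs picks the setting with the lowest validation error. The data is synthetic. In PyTorch, the same freezing is usually done by setting `requires_grad = False` on the backbone's parameters.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical stand-in for pre-trained layers: a fixed random projection.
# It is "frozen": nothing below ever updates W_backbone.
W_backbone = rng.normal(size=(10, 16))

def backbone(x):
    return np.tanh(x @ W_backbone)

# Synthetic task data, for illustration only.
X = rng.normal(size=(200, 10))
y = backbone(X) @ rng.normal(size=16) + 0.1 * rng.normal(size=200)
X_train, y_train, X_val, y_val = X[:150], y[:150], X[150:], y[150:]

def fine_tune_head(lr, epochs):
    """Gradient descent on a linear head only (mean squared error loss)."""
    feats = backbone(X_train)  # computed once, since the backbone is frozen
    w_head = np.zeros(feats.shape[1])
    for _ in range(epochs):
        grad = 2 * feats.T @ (feats @ w_head - y_train) / len(y_train)
        w_head -= lr * grad  # only the head's weights are updated
    return w_head

def val_mse(w_head):
    return float(np.mean((backbone(X_val) @ w_head - y_val) ** 2))

# Grid search over two hyperparameters, scored on held-out validation data.
results = {
    (lr, epochs): val_mse(fine_tune_head(lr, epochs))
    for lr in (0.005, 0.02, 0.05)
    for epochs in (50, 200)
}
best = min(results, key=results.get)
print("best (learning rate, epochs):", best, "validation MSE:", round(results[best], 4))
```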

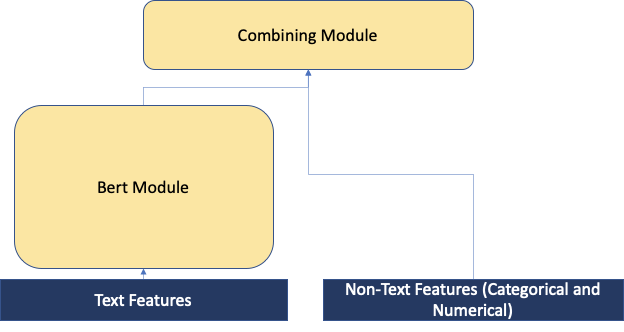

Multimodal Transformers

Multimodal transformers are designed to process and understand multiple forms of data, such as text, images, and audio, simultaneously. This capability opens up new possibilities for creating more intelligent and versatile AI systems.

Img src: Google

Integration of Different Types of Data

Whereas traditional transformer models work with a single type of data, usually text, multimodal transformers combine textual descriptions with visual inputs and auditory signals. This integration of different types of data is paving the way for more sophisticated and versatile AI systems.

- Text and Image Integration: By incorporating both textual and visual data, multimodal transformers can generate detailed image captions, create descriptive content from images, and even improve image recognition tasks by leveraging contextual textual information.

- Text and Audio Integration: Combining text and audio data allows multimodal transformers to excel in tasks such as speech recognition, where understanding the context provided by textual data can enhance the accuracy of transcriptions. Additionally, these models can generate audio responses to text queries, leading to more interactive and engaging user experiences.
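One common way to integrate modalities, used in a number of vision-language models, is cross-attention: tokens from one modality supply the queries, while embeddings from another modality supply the keys and values. The NumPy sketch below assumes the text and image have already been encoded into embeddings (random placeholders here, with hypothetical sizes) and shows text tokens attending over image patches. It is an illustration of the mechanism, not the design of any specific model.

```python
import numpy as np

def softmax(x, axis=-1):
    exp = np.exp(x - x.max(axis=axis, keepdims=True))  # subtract max for numerical stability
    return exp / exp.sum(axis=axis, keepdims=True)

def cross_attention(text_tokens, image_patches, w_q, w_k, w_v):
    """Text tokens (queries) attend over image patches (keys and values)."""
    q = text_tokens @ w_q    # (n_text, d)
    k = image_patches @ w_k  # (n_patches, d)
    v = image_patches @ w_v  # (n_patches, d)
    weights = softmax(q @ k.T / np.sqrt(k.shape[-1]), axis=-1)  # (n_text, n_patches)
    return weights @ v, weights

rng = np.random.default_rng(1)
d_text, d_image, d = 32, 48, 16                # hypothetical embedding sizes
text_tokens = rng.normal(size=(5, d_text))     # e.g. 5 caption tokens
image_patches = rng.normal(size=(9, d_image))  # e.g. a 3x3 grid of patch embeddings
w_q = rng.normal(size=(d_text, d))             # untrained projection weights
w_k = rng.normal(size=(d_image, d))
w_v = rng.normal(size=(d_image, d))

fused, weights = cross_attention(text_tokens, image_patches, w_q, w_k, w_v)
print(fused.shape)    # (5, 16): one image-aware vector per text token
print(weights.shape)  # (5, 9): how strongly each token attends to each patch
```

Projecting both modalities into a shared dimension `d` is what lets text and image embeddings of different sizes be compared in a single attention step.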

Applications and Benefits of Multimodal Models

The ability to process multiple data types concurrently provides multimodal transformers with a unique edge in various applications across different industries.

- Enhanced Content Generation: Multimodal transformers can create more nuanced and contextually aware content by leveraging information from different data sources. For example, a model can generate a detailed article based on an image or produce a comprehensive report combining textual data and visual charts.

- Improved Human-Computer Interaction: By understanding and generating multimodal data, these models facilitate more natural and intuitive interactions between humans and machines. Virtual assistants and chatbots can provide more accurate and relevant responses by considering both spoken language and visual cues.

- Healthcare Applications: In medical diagnostics, multimodal transformers can analyze textual reports, medical images, and patient records to provide more accurate diagnoses and treatment recommendations. This holistic approach can improve patient outcomes and streamline healthcare processes.

- Creative Industries: Artists and designers can benefit from multimodal models that generate creative content by combining text prompts with visual inspiration. This can lead to innovative designs, artworks, and multimedia projects that push the boundaries of creativity.

Enhanced Interpretability

By focusing on enhanced interpretability, the AI community aims to create transformer models that not only perform exceptionally well but also provide clear and understandable insights into their decision-making processes.

Efforts to Make Transformer Models More Interpretable and Transparent

- Transparency in AI: With the increasing complexity of transformer models, there is a growing need to make these models more interpretable and transparent. This means making it easier for users to understand how the model makes decisions.

- User Trust and Compliance: Enhancing interpretability is crucial for building user trust, ensuring ethical use, and meeting regulatory requirements, especially in sensitive areas like healthcare and finance.

- Research and Development: Researchers and developers are continually working on new methods and tools to demystify the decision-making processes of transformer models, making them more accessible and understandable to non-experts.

Techniques Such as Attention Visualization and Explainable AI

Attention Visualization

Attention mechanisms are at the core of transformer models, allowing them to focus on different parts of the input sequence when making predictions.

- Tools like attention heatmaps visualize which parts of the input the model is focusing on, helping users see how the model processes and prioritizes information.

- This technique is particularly useful in natural language processing tasks, where it can show which words or phrases are most influential in the model’s predictions.
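An attention heatmap is essentially the attention-weight matrix drawn as a grid: rows are the tokens doing the attending and columns are the tokens being attended to. The dependency-free Python sketch below renders a hypothetical weight matrix as a text heatmap. In practice you would extract real weights from a model (for example, Hugging Face Transformers models return them when called with `output_attentions=True`) and usually plot them with a charting library instead.

```python
def render_attention_heatmap(tokens, weights, shades=" .:-=+*#%@"):
    """Render an attention matrix as text: row i shows how much token i attends to each token.

    Each weight in [0, 1] is mapped to a character; denser characters mean more attention.
    """
    width = max(len(t) for t in tokens)
    lines = [" " * width + " | " + " ".join(t.rjust(width) for t in tokens)]
    for token, row in zip(tokens, weights):
        cells = [shades[min(int(w * len(shades)), len(shades) - 1)] * width for w in row]
        lines.append(token.rjust(width) + " | " + " ".join(cells))
    return "\n".join(lines)

tokens = ["the", "cat", "sat"]
# Hypothetical weights from one attention head (each row sums to 1).
weights = [
    [0.70, 0.20, 0.10],
    [0.10, 0.60, 0.30],
    [0.05, 0.55, 0.40],
]
print(render_attention_heatmap(tokens, weights))
```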

Explainable AI (XAI)

XAI encompasses a range of methods aimed at making AI models’ decisions more understandable. Techniques like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) provide insights into individual predictions.

- Efforts are also being made to develop inherently interpretable models that maintain high performance while being easier to understand.

- Explainable AI is particularly important in industries where decision transparency is critical, such as legal, medical, and financial sectors.
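SHAP is built on Shapley values from cooperative game theory. For a model with only a few inputs, they can be computed exactly by averaging each feature's marginal contribution over every subset of the other features. The pure-Python sketch below does that for a hypothetical three-feature model, treating an "absent" feature as taking a baseline value. That choice of baseline is an assumption; the `shap` library offers several ways to define absence and relies on approximations, because exact computation grows exponentially with the number of features. This is not the library's implementation.

```python
from itertools import combinations
from math import factorial

def shapley_values(predict, x, baseline):
    """Exact Shapley values for one prediction (absent features take their baseline value)."""
    n = len(x)

    def value(coalition):
        masked = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(masked)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Standard Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S without feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis

# Hypothetical model with an interaction between features 0 and 1.
def model(f):
    return 2.0 * f[0] + 1.0 * f[1] + 3.0 * f[0] * f[1] - 0.5 * f[2]

x = [1.0, 2.0, 4.0]
baseline = [0.0, 0.0, 0.0]
phis = shapley_values(model, x, baseline)
print([round(p, 3) for p in phis])
```

The attributions always sum to the difference between the prediction and the baseline prediction (here approximately 5, 5, and -2, summing to 8), and the interaction term's contribution is split evenly between features 0 and 1.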

Post-hoc Analysis

Post-hoc analysis involves examining the decisions made by a model after it has been trained. This can include generating explanations for specific predictions and understanding the model’s behavior.

Various tools and frameworks are being developed to facilitate post-hoc analysis, making it easier for developers and stakeholders to interpret model outputs.
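A simple, model-agnostic post-hoc technique is occlusion (leave-one-out) analysis: remove each input token in turn and record how much the model's score changes. The sketch below uses a hypothetical keyword-based scorer in place of a trained transformer so that it runs on its own; with a real model you would call its prediction function instead. Occlusion is chosen here only as an illustration; it is one of many post-hoc methods.

```python
def occlusion_importance(score, tokens):
    """Leave-one-out attribution: how much does the score drop when each token is removed?"""
    full = score(tokens)
    return {
        (i, tok): full - score(tokens[:i] + tokens[i + 1:])
        for i, tok in enumerate(tokens)
    }

# Hypothetical stand-in for a trained classifier's positive-sentiment score.
LEXICON = {"great": 2.0, "slow": -1.0}

def toy_sentiment_score(tokens):
    return sum(LEXICON.get(t, 0.0) for t in tokens)

tokens = "the service was great but slow".split()
for (i, tok), delta in occlusion_importance(toy_sentiment_score, tokens).items():
    print(f"{i} {tok:>8}: {delta:+.2f}")
```

A positive value means the token pushed the score up, and a negative value means it pulled the score down.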

Applications of Transformer Models Across Industries

The following examples illustrate the transformative potential of transformer models across various industries.

Img src: Google

Healthcare

- Medical Diagnosis: Transformer models analyze patient data, medical histories, and clinical notes to aid in diagnosing diseases. They can identify patterns and correlations that human practitioners might miss, supporting more accurate and timely diagnoses.

- Drug Discovery: AI-driven drug discovery leverages transformers to predict how different compounds will interact with biological targets. This accelerates the identification of potential new drugs and reduces the time and cost associated with traditional drug discovery processes.

- Personalized Medicine: By analyzing genetic information, lifestyle data, and health records, transformer models help create personalized treatment plans. These plans are tailored to the unique characteristics of each patient, improving treatment efficacy and patient outcomes.

Finance

- Fraud Detection: Transformer models monitor transactions in real time, detecting unusual patterns and potentially fraudulent activities. They can analyze vast amounts of data quickly, providing robust fraud detection and prevention mechanisms.

- Algorithmic Trading: In financial markets, transformer models process and analyze market data, news, and trends to inform trading strategies. Their ability to handle complex, high-frequency data makes them valuable tools for algorithmic trading.

- Customer Service: AI-powered chatbots and virtual assistants in the finance sector use transformer models to handle customer inquiries, provide financial advice, and resolve issues. This enhances customer experience by offering efficient and accurate support.

E-commerce

- Product Recommendations: Transformer models analyze user behavior, preferences, and purchase history to deliver highly personalized product recommendations. This improves customer satisfaction and increases sales by presenting users with items they are more likely to purchase.

- Customer Support: AI chatbots in e-commerce use transformer models to handle a wide range of customer queries, from order tracking to product information. This provides timely and accurate responses, improving the overall customer support experience.

- Personalized Marketing: By understanding customer preferences and behaviors, transformer models help create targeted marketing campaigns. These campaigns are more likely to engage customers and drive conversions through personalized content and offers.

Education

- Intelligent Tutoring Systems: Transformer models power intelligent tutoring systems that adapt to individual students’ learning styles and needs. These systems provide personalized feedback, resources, and guidance, enhancing the learning experience.

- Personalized Learning Experiences: In educational platforms, transformers analyze students’ progress and performance to tailor lessons and materials. This ensures that each student receives a customized learning journey, improving engagement and outcomes.

Future Trends in Transformer Model Development

Transformer model development is evolving rapidly, with several exciting trends on the horizon that promise to shape the future of transformer models.

OpenAI’s GPT-4 and Beyond

As we look forward to future iterations of transformer models, expectations are high for OpenAI’s GPT-4 and its successors.

- Innovations in Model Architecture: Future models are anticipated to incorporate advanced techniques to enhance performance and efficiency. These may include more sophisticated self-attention mechanisms, improved parameter optimization, and better handling of long-range dependencies.

- Enhanced Capabilities: Future iterations will likely offer even more powerful language understanding and generation capabilities, enabling more complex and nuanced interactions. This will open up new possibilities for applications across various domains, from healthcare to finance.

Democratization of AI

One of the significant future trends is the democratization of AI, making advanced transformer models accessible to a broader range of developers and organizations.

- Lowering Barriers to Entry: Efforts are being made to reduce the computational and financial barriers associated with training and deploying transformer models. This includes the development of more efficient models that require less computational power and initiatives to provide affordable access to high-performance computing resources.

- Open-Source Contributions: The open-source community continues to play a crucial role in democratizing AI. By sharing code, pre-trained models, and best practices, developers worldwide can collaborate and contribute to the advancement of transformer technology.

Explainability and Interpretability

As transformer models become more integral to critical applications, enhancing the transparency of model decisions and outputs is paramount.

- Improving Trust: Techniques for making model decisions more interpretable will help build trust among users and stakeholders. This includes developing methods to visualize attention patterns, provide clear explanations for model predictions, and ensure that models adhere to ethical standards.

- Regulatory Compliance: As regulations around AI usage become stricter, having interpretable models will be essential for compliance. This trend will push the development of tools and frameworks that allow for better auditing and understanding of AI systems.

Integration with Other Technologies

The future of transformer models lies in their integration with other emerging technologies, creating more comprehensive and powerful machine learning solutions.

- Blockchain Integration: Combining transformer models with blockchain technology can enhance data security and transparency, particularly in applications requiring immutable and verifiable records, such as finance and supply chain management.

- IoT and Edge Computing: Integrating transformer models with Internet of Things (IoT) devices and edge computing can enable real-time data processing and decision-making at the edge, reducing latency and improving efficiency in applications like smart cities and industrial automation.

Conclusion

As we witness the rapid advancements in transformer models, it is clear that these innovations are not only transforming AI but also reshaping industries and enhancing our daily lives.

The future of transformer models promises even greater possibilities, and now is the time to be part of this exciting journey. From more efficient models to groundbreaking applications in healthcare, finance, and beyond, the potential of transformer models is immense.

So, what are you waiting for?

Hire AI engineers at ValueCoders (a leading Machine Learning development company in India) who use cutting-edge technologies to harness the power of AI and build world-class solutions for you.