After Google, Meta is now in the eye of the storm over errors made by its artificial intelligence, once again involving inaccurate racial representations

After Google's case, Meta AI's image generator has also found itself at the center of controversy over its inability to produce accurate images despite clear prompts.

Here too, ethnicity is at the heart of the story, although this time the problem seems to concern mixed couples of Asian and Caucasian people.

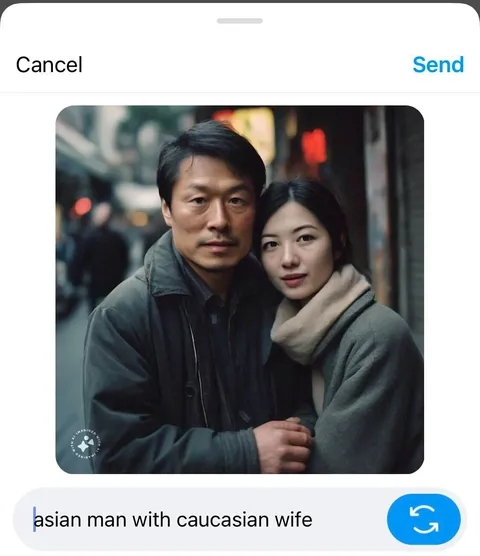

As several sources have reported, even with repeated requests and reworded prompts, Meta's AI appears unable to generate an image of an Asian man next to a white woman. The persistent difficulty these systems have with content touching on such topics continues to raise doubts and fuel heated debate.

Impossible couples

According to the report, the requests were made to Meta's AI through Instagram, using fairly simple prompts such as “Asian man and Caucasian friend” or “Asian man and white wife.”

This was reportedly not an isolated incident but a problem that occurred several times.

The Verge, describing its own experience with the image generator, reported only one success out of ten attempts with the prompt “Asian woman with Caucasian husband.”

Even that successful result had flaws: the image showed an older man alongside a young, light-skinned woman.

Engadget ran similar tests and got the same ratio: one accurate image out of ten.

According to the outlet, the other nine images showed white faces, and the AI often added culturally specific clothing even when the prompt did not mention it.

Repeated inaccuracies

It is not yet clear why Meta AI fails to follow certain prompts.

This is also not the first time one of Meta's AI tools has made the news for its mistakes.

Last year, its AI sticker generation tool came under fire for creating NSFW images and Nintendo characters with guns.

Nor is this the first time an AI tool has struggled to generate images involving race and gender.

In February, Google suspended the image generation feature of its Gemini AI after criticism over inaccurate images involving the same issue.

So far, Meta has offered no explanation for the inaccurate images, merely pointing out that its AI tools are still in beta and may make mistakes.
