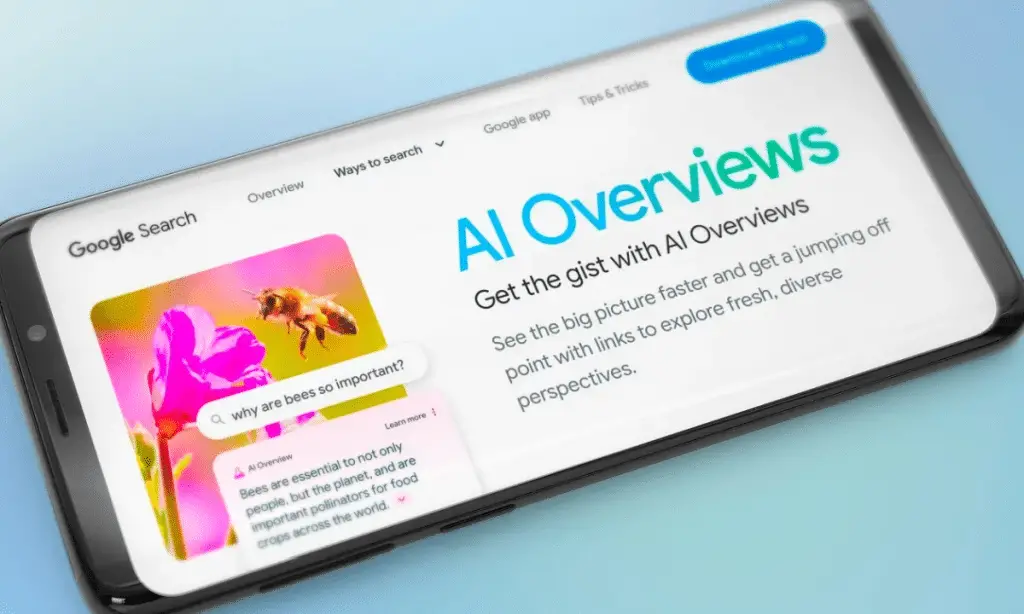

Google recently implemented a significant update to its search engine, introducing an artificial intelligence (AI)-based tool known as “AI Overviews”.

This experimental feature was designed to browse the web and summarize search results using the Gemini AI model. Despite its innovative intentions, however, the new feature has raised a number of concerns and doubts, highlighted by various incidents reported on social media and in the news.

AI Overviews has reportedly given users some questionable advice, including suggestions to eat rocks, add glue to pizza and use chlorine gas to clean washing machines. These suggestions, bordering on the absurd, have caused considerable astonishment and fueled a heated debate on the role and reliability of artificial intelligence in our daily lives.

In one particularly alarming case, the AI appeared to suggest jumping off the Golden Gate Bridge to a user who was expressing feelings of depression, a response that is clearly inappropriate and potentially dangerous, especially for people looking for help and support.

How AI Overviews works

AI Overviews works by analyzing the web and condensing search results into concise summaries. Google introduced the feature during its I/O developer conference, initially making it available to a select group of users in the United States, with a global release scheduled for the end of the year.

Despite its potential, AI Overviews has not been free from errors, as users have reported cases in which the AI generated summaries based on articles from the satirical site The Onion or humorous posts from Reddit.

As we saw a little while ago, when asked a question about pizza, the AI reportedly recommended adding about ⅛ of a cup of non-toxic glue to the sauce to increase its adhesion, apparently drawing on an old Reddit joke posted about ten years earlier.

Other inaccuracies attributed to AI Overviews include claims that Barack Obama is Muslim, that founding father John Adams was a 21-time graduate of the University of Wisconsin, and that a dog played in the NBA, NHL and NFL.

Google representatives responded to the concerns by pointing out that most AI Overviews provide high-quality information, with links to learn more, and by stating that extensive testing was conducted before the launch of this new experience to meet the company's rigorous quality standards.

Although these incidents appear to be isolated and Google continues to refine its systems, this is not the first time that generative AI models have shown “hallucinations”, making up fictitious information.

In one notable example, ChatGPT fabricated a sexual harassment scandal, falsely implicating a real law professor and citing nonexistent newspaper reports as evidence.

As AI technology evolves, it is essential to strike a balance between innovation and accuracy. While AI Overviews is promising, it is important to remain vigilant and ensure that its outputs are aligned with reliable information; after all, even AI can have its bizarre moments.

The promise of Artificial Intelligence

Artificial intelligence is one of the most promising technologies of our time, and its applications range from virtual assistants to autonomous vehicles, from medical diagnosis to machine translation.

Google, with its vast experience in the field, has sought to bring AI to the center of our online search experience. However, as AI Overviews demonstrates, even the best intentions can run into unexpected situations.

The main challenge for any synthesis or summary tool is ensuring the accuracy and quality of the information provided, and AI Overviews appears to have struggled in this regard.

Its tendency to generate content based on satirical sources or comic posts is worrying, because users rely on search results for reliable information. When AI introduces incorrect or misleading elements, it creates a problem of trust.

Google bears great responsibility for ensuring that its features are useful and reliable. Google representatives have stated that the erroneous examples are “generally very unusual queries and not representative of most people’s experiences”, but it is important to remember that the AI must be able to handle unusual situations too.

Verifying sources and keeping responses consistent are key to maintaining the integrity of the service. This raises important questions about how such models are trained and overseen: how can we ensure that AI does not become a vehicle for disinformation?

In conclusion, AI Overviews represents a step forward in the field of information synthesis, but it requires constant attention to improve the quality of its answers. Google should continue to refine the system, involving experts and users, to ensure that AI remains a reliable ally in our everyday searches.

If you are interested in science and technology, keep following us so you don’t miss the latest news from around the world!
